4-node hyper-converged Supermicro cluster. Storage is provided by Ceph. NVMe (default) and spinning HDD are available.

Better self-service sometime™: https://git.k-space.ee/k-space/todo/issues/127

¶ For user

- Appliances not providing services to K-SPACE itself will not get a xyz.k-space.ee domain name!

- K-SPACE does not advertise an SLA! While outages have historically been once in 2-3 years, no responsibility is taken for the VMs acting out and rebooting, or the network.

- How to upgrade Ubuntu 22.04 → 24.04 LTS: https://ubuntu.com/server/docs/how-to-upgrade-your-release

¶ How to get a Debian LTS VM:

- Have an eligible membership and/or write to info@k-space.ee about what you need.

- Sign in to pve.k-space.ee

- Send an e-mail to info@k-space.ee, include:

- briefly, what are you planning to do with the VM

- SSH public keys

- optional: non-default username for ssh

- is public IPv4 needed (otherwise IPv6 + LAN IPv4)

- do you need extra vCPU, memory (4 GiB) or storage (100 GB) (see VM pricing)

- Access the hypervisor at pve.k-space.ee. Select 'Pool view':

Default SSH username is ubuntu or debian, depending on the VM's OS.

Default IPv6 is currently a single-IP abnormality, fitting for the Zoo network. As long as it doesn't conflict with existing IPs, you are welcome to change it.

- See also: IPv6 prefix delegation

- See also: K-SPACE network

¶ qemu-guest-agent

Used to:

- Improve migrations of VM between hosts, sync notifications.

- Check and notify if an absurd number of security updates are available.

If you remove or disable qemu-guest-agent:

- Disable it under VM → Options.

- Reboot the machine

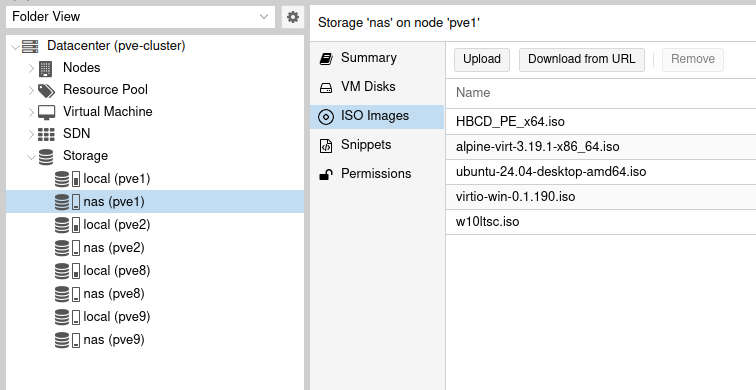

¶ Using ISOs

pve-fs ISO storage is shared and accessible to all PVE users. Integrity of images is not guaranteed!

- Select Folder or Server View.

- Upload an ISO to pve-fs storage → ISO Images.

- Your VM → Hardware → Double click CD/DVD drive → Select storage.

- Booting: If Your VM → Options → Boot Order is locked, you can still press Esc in Console while booting (similar to F12 on physical hardware).

- Cleanup: Hardware → CD/DVD: Do not use any media and Shutdown/Stop the VM. It'd be nice to clean up unused ISOs yourself. On periodic ISO cleanups, if your VM still had it attached, migrations will fail and your VM will be stopped out of your control.
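Before a cleanup it may help to check whether a VM still references an ISO. The following is a minimal sketch, not an official tool: it assumes the output of `qm config <vmid>` is piped in on stdin and that CD/DVD drives appear there as lines containing `media=cdrom`. The helper name `attached_isos` is hypothetical.

```shell
# attached_isos (hypothetical helper): read `qm config <vmid>` output on stdin
# and print the CD/DVD drive lines that still point at an image.
# Drives set to "Do not use any media" appear as "none,media=cdrom" and are skipped.
attached_isos() {
    # `|| true` keeps the exit status at 0 when nothing is attached.
    grep 'media=cdrom' | grep -v ': none,' || true
}
```

Possible usage on a PVE node: `qm config 155 | attached_isos`. Empty output would suggest no ISO is attached to that VM.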

¶ For cluster administrator

Reserved for Proxmox VMs with IDs 100..199:

IPv4 193.40.103.100 .. 193.40.103.199

IPv6 2001:bb8:4008:20::100/64 .. 2001:bb8:4008:20::199/64

Cluster-wide mac prefix: bc:24:11 (default)

- Most non-user docs are in, or are moving to, https://git.k-space.ee/k-space/ansible/src/branch/main/proxmox

- Please sync VM start/stop state with VM → Options → Start at boot. (TODO: PVE 9.1 promises to do this for HA VMs, check if it is this).

- The OS tags (d12, ub24_f20) are for tracking which template the VM originates from. Do NOT add them to custom VMs.

¶ Creating VM

- Full clone from template.

- VMID for EENet: 1xx (193.40.103.1xx)

- VMID for Elisa: 62xx (62.65.250.xx)

- VMID for local: 2yxx (172.2y.)

- Populate username in description.

- If possible, name the VM with username-something. If you have trouble finding a something, seek lehmad, lemmad or mnemonics.

- Cloud-init → IPv4 and IPv6 address from …99 to VMID.

- VMID 155 gets 193.40.103.155, 2001:bb8:4008:20::155.

- Permissions → Add User permission, role K-SPACE_VM_USER.

- Populate cloud-init credentials.

- (top right) More → Manage HA: Group=pve9x, Request State=started

- Mail user:

- https://wiki.k-space.ee/en/hosting/proxmox

- ssh debian@[2001:bb8:4008:20::155] ← change to match config

Note: The CPU count is set higher than needed so that more can be hotplugged later; the vCPU and resource limits are what matter.
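The VMID-to-address rule for the EENet range can be sketched as a small shell function. This is only an illustration of the mapping described above (VMID 155 gets 193.40.103.155 and 2001:bb8:4008:20::155); the function name `vmid_to_addrs` is hypothetical, and it covers only the reserved 100..199 range.

```shell
# vmid_to_addrs (hypothetical helper): print the reserved IPv4 and IPv6
# address for a VMID in the 100..199 range, separated by a space.
vmid_to_addrs() {
    vmid="$1"
    case "$vmid" in
        # Exactly three digits starting with 1, i.e. 100..199.
        1[0-9][0-9]) ;;
        *)
            echo "VMID '$vmid' is outside the reserved 100..199 range" >&2
            return 1
            ;;
    esac
    # The VMID digits are reused as-is in both addresses.
    echo "193.40.103.${vmid} 2001:bb8:4008:20::${vmid}"
}
```

For example, `vmid_to_addrs 155` prints `193.40.103.155 2001:bb8:4008:20::155`. The /64 prefix length still has to be added when filling in Cloud-init.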

¶ Updating major versions

- Coordinate with others to not mess with their 5-figure projects

- Check patch notes as usual

- After update:

- Comment out the enterprise repo in /etc/apt/sources.list.d/,

- then add deb http://download.proxmox.com/debian/pve bullseye pve-no-subscription, make sure to update the codename.

- Ensure https://pve.proxmox.com/wiki/Firmware_Updates#_set_up_early_os_microcode_updates
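To avoid leaving a stale codename in the repository line, the line can be generated instead of typed. A minimal sketch, assuming the one-line sources.list format shown above and that /etc/os-release provides `VERSION_CODENAME` (as Debian does); the function name `pve_no_subscription_line` is hypothetical.

```shell
# pve_no_subscription_line (hypothetical helper): print the apt source line
# for the pve-no-subscription repo. The codename is the first argument, or is
# read from /etc/os-release when no argument is given.
pve_no_subscription_line() {
    codename="${1:-}"
    if [ -z "$codename" ]; then
        # Source os-release in a subshell so its variables do not leak out.
        codename="$(. /etc/os-release && printf '%s' "$VERSION_CODENAME")"
    fi
    printf 'deb http://download.proxmox.com/debian/pve %s pve-no-subscription\n' "$codename"
}
```

Run `pve_no_subscription_line` on the freshly upgraded node and compare the result with what is in /etc/apt/sources.list.d/ before writing anything.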

¶ Recovering from migration problems.

This has not happened in years.

- Failed.

2021-02-21 17:44:11 migration status: completed

2021-02-21 17:44:14 # /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=pve1' root@2001:bb8:4008:21:172:21:20:1 qm unlock 156

2021-02-21 17:44:14 ERROR: failed to clear migrate lock: Configuration file 'nodes/pve1/qemu-server/156.conf' does not exist

2021-02-21 17:44:14 ERROR: migration finished with problems (duration 00:00:32)

TASK ERROR: migration problems

- Do not panic. The VM is still online on the sender node.

- This guide is aimed at VMs, not containers. Containers differ from VMs, as such this guide is not fully compatible with containers.

- There's 4-7 components either fighting or out of sync. This is bad, and this is the most reliable way I found to get all working again.

- Go to the shell of the sender, run:

qm unlock <id of the vm stuck, ex 111>

If the command output is Configuration file 'nodes/pve2/qemu-server/156.conf' does not exist, run it on the receiving end instead. If that succeeds, you don't have to hibernate.

- Hibernate the VM in question on the sender node. (broadcasts and sets/overwrites various doings of the VM, hibernate is the least costly)

- There will be around a minute or two downtime for the VM. Coordinate with the owner if possible (during working hours).

- No data will be lost, including those in memory.

- Resume the VM.

- Re-attempt migration. This attempt will fail. (cleanup)

- You should now be where you started. Best of luck.

- Re-attempt migration.
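The steps above can be written down as a checklist of `qm` commands. The sketch below only prints the commands for review and runs nothing. It assumes that hibernating corresponds to `qm suspend <vmid> --todisk 1` and that the web UI's Resume corresponds to `qm resume <vmid>`; both mappings are assumptions to verify on your PVE version. The function name `migration_recovery_plan` is hypothetical.

```shell
# migration_recovery_plan (hypothetical helper): print, but do not run, the
# commands matching the recovery steps. Arguments: VMID and target node name.
migration_recovery_plan() {
    vmid="$1"
    target="$2"
    cat <<EOF
# 1. Clear the stale lock (run on the receiving node instead if the config is missing here)
qm unlock $vmid
# 2. Hibernate on the sender node (assumed equivalent of the UI's Hibernate)
qm suspend $vmid --todisk 1
# 3. Resume the VM
qm resume $vmid
# 4. First re-attempt, expected to fail (cleanup)
qm migrate $vmid $target --online
# 5. Second re-attempt
qm migrate $vmid $target --online
EOF
}
```

For example, `migration_recovery_plan 156 pve2` prints the five commands with their step comments, so each one can be copied and run by hand once the previous step has been checked.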

¶ Chroot without booting VM

Part of sweaty old documentation, no use with current tooling.

modprobe nbd max_part=8

qemu-nbd --connect /dev/nbd0 /mnt/pve/nas/images/9028/vm-9028-disk-0.raw

mkdir /mnt/cr

mount /dev/nbd0p2 /mnt/cr

mount -o bind /proc /mnt/cr/proc

mount -o bind /sys /mnt/cr/sys

mount -o bind /dev /mnt/cr/dev

mv /mnt/cr/etc/resolv.conf /mnt/cr_resolv.conf

cp /etc/resolv.conf /mnt/cr/etc/resolv.conf

chroot /mnt/cr

exit

umount /mnt/cr/{proc,sys,dev}

rm /mnt/cr/etc/resolv.conf

mv /mnt/cr_resolv.conf /mnt/cr/etc/resolv.conf

umount /mnt/cr

qemu-nbd --disconnect /dev/nbd0

rmmod nbd